
Gestureworks Core Internals

This is an archive for Gestureworks 1.

To learn about the new Gestureworks, see the Gestureworks 2 Wiki

Overview

GestureWorks Core is a comprehensive gesture processing library for Windows applications that leverages the efficiency of C++. This authoring environment enables developers to quickly create gesture-driven applications in many languages. The core library ships with bindings and examples for C++, .NET, Java and Python, but users are encouraged to create their own bindings in any language that suits their needs.

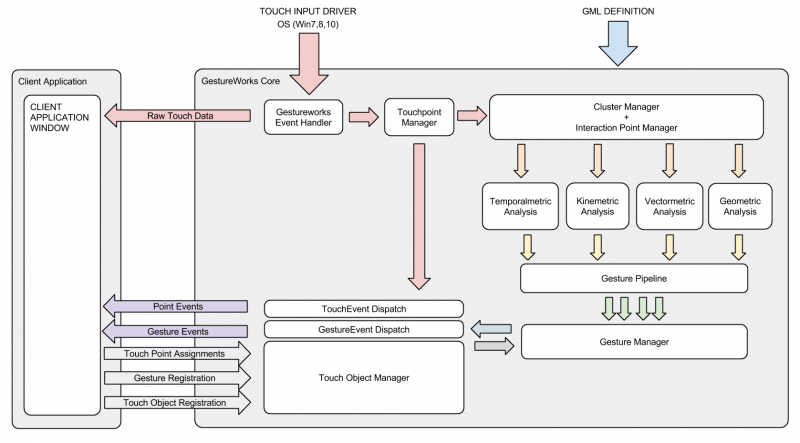

The library works by clustering touch points intercepted at the operating system level and performing a complex series of analyses in the “Gestureworks Core”, which results in object-targeted gesture events being passed to the application layer. The application then decides what happens with the event data.

The gesture analysis performed in the core is a multi-step process collectively called “gesture processing”. The following sections outline this process in more detail.

Gestureworks Event Handler

Gestureworks Core creates an abstracted input model so that touch, motion and general HCI input can be analyzed for gestures and passed to the application layer via a standard binding. This allows developers to write GML for applications in a device-agnostic manner, so that they are free to define a completely natural UX that operates as expected regardless of the input device. A critical step in abstracting the input data is normalizing device input into a set of virtual interaction points, regardless of whether the integrated device actually uses touch interaction to generate “touch” points. For this reason, the Gestureworks Core documentation uses the phrase “touch point” in an abstract sense; it should more accurately be read as “virtual interaction point”.
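To make the idea of a virtual interaction point concrete, here is a minimal sketch in which touch, mouse and hand-tracker input are all mapped into one record type. The `InteractionPoint` type, the 0..1 coordinate space and the `from_*` helpers are hypothetical illustrations, not the actual Gestureworks Core data structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionPoint:
    """Hypothetical device-agnostic point record (not the real Core struct)."""
    point_id: int
    x: float      # normalized 0..1 across the input area (an assumption)
    y: float
    source: str   # "touch", "mouse", "motion", ...


def from_touch(touch_id, px, py, screen_w, screen_h):
    """Map a raw touch in screen pixels to a virtual interaction point."""
    return InteractionPoint(touch_id, px / screen_w, py / screen_h, "touch")


def from_mouse(px, py, screen_w, screen_h):
    """A mouse has a single 'finger', so it always reuses id 0 here."""
    return InteractionPoint(0, px / screen_w, py / screen_h, "mouse")


def from_hand_tracker(hand_id, x_mm, y_mm, range_mm):
    """Map a tracked hand position (mm, centred on 0) into the same 0..1 space."""
    half = range_mm / 2
    return InteractionPoint(hand_id, (x_mm + half) / range_mm,
                            (y_mm + half) / range_mm, "motion")
```

Everything downstream of this step only ever sees `InteractionPoint` values, which is what lets the same gesture definition respond to a finger, a cursor or a tracked hand.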

Touchpoint and Cluster Manager

The first step in the process is the direct association of interactive touch points with registered interactive objects in the application. Touch point groups called “clusters” are created for each registered interactive object (the “owner” or “target” of the touch points). This is done using a hit-test function that is built into the application binding layer.
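The hit-test step can be sketched as follows, assuming rectangular objects listed back-to-front so that the topmost object wins where objects overlap. `TouchObject` and `build_clusters` are hypothetical names; a real binding would also likely record ownership once, when a point first lands, rather than re-testing on every call as this sketch does.

```python
from dataclasses import dataclass


@dataclass
class TouchObject:
    """Hypothetical registered interactive object with an axis-aligned box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def hit_test(self, px, py):
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def build_clusters(objects, points):
    """Group point ids by the topmost object each point hits.

    `objects` is ordered back-to-front, so later entries win overlaps.
    `points` maps point id -> (x, y). Points that hit nothing are left out.
    """
    clusters = {obj.name: [] for obj in objects}
    for pid, (px, py) in points.items():
        for obj in reversed(objects):  # test the front-most object first
            if obj.hit_test(px, py):
                clusters[obj.name].append(pid)
                break
    return clusters
```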

GML Definitions

Processing a frame in Gestureworks involves characterizing the behavior of the touch points in each object cluster, then matching these behaviors against gestures that have also been registered to the interactive object. Gestures can have very different matching criteria and use a variety of analysis methods to characterize changes in the state of clusters. These criteria are defined directly in the GML gesture associated with the application and the object. GML definitions can specify every detail of a gesture: the number of touch points, the type of motion, return values, applied numerical filters, value limits, target properties, and how and when the gesture event is dispatched. Because GML is XML-based and external to the application, these definitions can be modified without changing the application itself, which removes the need to recompile when developing gesture interactions.
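The benefit of keeping gesture criteria in external XML is that they become data read at runtime. The sketch below parses a simplified, hypothetical gesture definition with Python's standard `xml.etree.ElementTree`; the element and attribute names are illustrative only and do not follow the real GML schema (see GestureML.org for that).

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical gesture definition in the spirit of GML.
# Element and attribute names are illustrative, not the real GML schema.
GML_SOURCE = """
<GestureMarkupLanguage>
  <Gesture id="2-finger-drag" type="drag">
    <cluster point_number="2"/>
    <algorithm class="kinemetric" type="continuous"/>
    <returns>
      <property id="dx" threshold="2" min="-50" max="50"/>
      <property id="dy" threshold="2" min="-50" max="50"/>
    </returns>
    <filters>
      <filter type="mean" samples="4"/>
    </filters>
  </Gesture>
</GestureMarkupLanguage>
"""


def load_gestures(xml_text):
    """Parse gesture definitions into plain dicts keyed by gesture id."""
    root = ET.fromstring(xml_text.strip())
    gestures = {}
    for g in root.findall("Gesture"):
        algorithm = g.find("algorithm")
        gestures[g.get("id")] = {
            "type": g.get("type"),
            "points": int(g.find("cluster").get("point_number")),
            "metric": algorithm.get("class"),
            "dispatch": algorithm.get("type"),
            "returns": {
                p.get("id"): {k: float(p.get(k)) for k in ("threshold", "min", "max")}
                for p in g.find("returns").findall("property")
            },
            "filters": [dict(f.attrib) for f in g.find("filters").findall("filter")],
        }
    return gestures
```

Raising the drag threshold in this sketch means editing `threshold="2"` in the XML and reloading; no application code changes.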

Cluster Analysis

Cluster analysis generally uses one of four metrics, each providing a series of configurable calculations that measure specific fundamental dynamic properties of a cluster: Kinemetric, Temporalmetric, Vectormetric and Geometric analysis (on some occasions more than one metric is used). These collections of algorithms generate the raw cluster data, which is then passed into the gesture pipeline.
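As an illustration of what a kinemetric-style calculation might produce, the sketch below compares two frames of one cluster and returns a centroid translation (`dx`, `dy`) and a spread ratio (`ds`). The function names and formulas are assumptions chosen for clarity, not the library's actual algorithms.

```python
import math


def centroid(points):
    """Mean position of a cluster's points; `points` maps id -> (x, y)."""
    n = len(points)
    return (sum(p[0] for p in points.values()) / n,
            sum(p[1] for p in points.values()) / n)


def mean_spread(points, c):
    """Average distance of the points from the centroid `c`."""
    return sum(math.hypot(x - c[0], y - c[1]) for x, y in points.values()) / len(points)


def kinemetric_deltas(prev, curr):
    """Compare two frames of one cluster and return raw deltas.

    Only points present in both frames are used, so a finger that lifts
    or lands between frames does not produce a spurious jump.
    """
    common = prev.keys() & curr.keys()
    if not common:
        return None
    p = {k: prev[k] for k in common}
    q = {k: curr[k] for k in common}
    c0, c1 = centroid(p), centroid(q)
    s0, s1 = mean_spread(p, c0), mean_spread(q, c1)
    return {
        "dx": c1[0] - c0[0],
        "dy": c1[1] - c0[1],
        # Ratio of spreads: 1.0 means no pinch or spread.
        "ds": s1 / s0 if s0 > 0 else 1.0,
    }
```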

Gesture Pipeline

The cluster data is further processed through the gesture pipeline, which consists of a series of filters that modify the delta values. For example, the “mean” filter samples a set of delta values that pass through it and returns their mean. More than one filter can be applied to data passing through the gesture pipeline, and the effect of each added filter is cumulative. The final values returned from the gesture pipeline therefore depend not only on the initial values and the applied filters but also on the order in which the filters are arranged.
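The order dependence is easy to demonstrate with two simple filters. In this sketch, `MeanFilter` and `LimitFilter` are hypothetical stand-ins for the pipeline's mean and value-limit stages; running the same deltas through them in opposite orders yields different outputs.

```python
from collections import deque


class MeanFilter:
    """Return the mean of the last `window` values that passed through."""
    def __init__(self, window):
        self.samples = deque(maxlen=window)

    def __call__(self, value):
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


class LimitFilter:
    """Clamp values to the range [lo, hi]."""
    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi

    def __call__(self, value):
        return max(self.lo, min(self.hi, value))


def run_pipeline(filters, values):
    """Pass each delta through every filter in order; effects accumulate."""
    out = []
    for v in values:
        for f in filters:
            v = f(v)
        out.append(v)
    return out


deltas = [0.0, 10.0, 0.0]
# Averaging first lets the spike raise the mean before clamping: [0.0, 4.0, 3.33...]
mean_then_limit = run_pipeline([MeanFilter(3), LimitFilter(-4.0, 4.0)], deltas)
# Clamping first caps the spike before it is averaged: [0.0, 2.0, 1.33...]
limit_then_mean = run_pipeline([LimitFilter(-4.0, 4.0), MeanFilter(3)], deltas)
```

Filters are stateful here (the mean filter keeps a sample window), which is one reason each pipeline gets fresh filter instances.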

Gesture Manager

Once the gesture data has passed through the gesture pipeline it is organized and packaged in the gesture manager. The data structure is then sent for dispatching.

Gesture Event Dispatch

The gesture event dispatch receives qualified gesture data structures and actively manages when and how each gesture event is dispatched to the application layer. For example, some events are “continuous” while others are “discrete”. Continuous gesture events that qualify for dispatch are propagated to the application layer on every processed frame. Discrete gesture events are dispatched at a predefined interval or once per gesture action.
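A minimal sketch of the continuous/discrete distinction, assuming the gesture manager hands the dispatcher either a packaged event or `None` on each frame. `GestureEvent` and `Dispatcher` are hypothetical names, and only the "once per gesture action" form of discrete dispatch is modelled; interval-based dispatch is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class GestureEvent:
    """Hypothetical packaged result from the gesture manager."""
    gesture_id: str
    target: str
    values: dict = field(default_factory=dict)


class Dispatcher:
    """Decide per frame whether a qualified gesture reaches the application.

    continuous: send on every frame the gesture qualifies.
    discrete:   send once when the gesture first qualifies, then stay quiet
                until it stops qualifying (one event per gesture action).
    """
    def __init__(self, mode):
        self.mode = mode
        self.active = False

    def step(self, event):
        """`event` is a GestureEvent if it qualified this frame, else None."""
        qualified = event is not None
        send = qualified and (self.mode == "continuous" or not self.active)
        self.active = qualified
        return event if send else None
```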

Summary Example

For example, a “2-finger-drag” gesture will only dispatch a gesture event if two touch points move by more than a critical threshold amount. When the gesture is returned, the change in position is placed in the event data structure. To achieve this, Gestureworks Core is internally configured (using the “2-finger-drag” GML) so that the initial matching criteria require exactly two touch points in an object cluster. Using the kinemetric, the translation of the touch points in the cluster is continuously calculated and passed into the gesture pipeline. These delta values are then checked against the threshold conditions. If they fall within the correct limits, the gesture data is packaged into a gesture event object and dispatched to the application layer. On receiving the gesture event, the application can use the returned dx and dy values to update the position of the relevant display item so that it appears to be dragged.
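The whole 2-finger-drag flow can be condensed into a short sketch: match exactly two points, compute the centroid translation, apply a threshold, and let the application move its display item. `two_finger_drag`, `on_drag`, `DisplayItem` and the threshold value of 2.0 are hypothetical; in Gestureworks these steps are driven by the GML definition rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass
class DisplayItem:
    """Stand-in for the application's on-screen object."""
    x: float
    y: float


def two_finger_drag(prev, curr, threshold=2.0):
    """Return (dx, dy) if the same two points moved past `threshold`, else None.

    `prev` and `curr` map point id -> (x, y) for one object's cluster.
    """
    if len(curr) != 2 or prev.keys() != curr.keys():
        return None  # matching criteria: exactly the same two points
    cx0 = sum(p[0] for p in prev.values()) / 2
    cy0 = sum(p[1] for p in prev.values()) / 2
    cx1 = sum(p[0] for p in curr.values()) / 2
    cy1 = sum(p[1] for p in curr.values()) / 2
    dx, dy = cx1 - cx0, cy1 - cy0
    if abs(dx) < threshold and abs(dy) < threshold:
        return None  # movement too small to count as a drag
    return dx, dy


def on_drag(item, delta):
    """Application-side handler: move the item by the returned deltas."""
    dx, dy = delta
    item.x += dx
    item.y += dy


photo = DisplayItem(100.0, 100.0)
prev = {1: (10.0, 10.0), 2: (30.0, 10.0)}
curr = {1: (15.0, 13.0), 2: (35.0, 13.0)}
delta = two_finger_drag(prev, curr)
if delta is not None:
    on_drag(photo, delta)
# photo is now DisplayItem(x=105.0, y=103.0)
```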

For more details on gesture types that use different metrics, see the GestureML wiki.

For more information about Gesture Markup Language (GestureML), see GestureML.org.

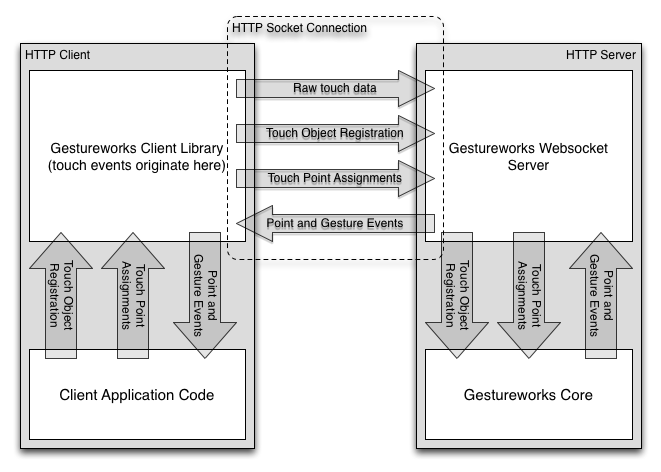

Websocket Internals Overview

The internal structure of the HTML server is almost identical to the standard Gestureworks Core install described above, with the Gestureworks Websocket Server taking the role of the client application. The only change is that the Gestureworks Event Handler is disabled and raw touch data is provided by the client.

The Websocket Server and Client Library maintain a persistent websocket connection using the Socket.IO library.

Touch events are captured by the browser and exposed through the native JavaScript API. The client subscribes to these events and uses them to generate touch events, which it publishes to the server once per animation frame (up to 60 Hz). To minimize network traffic, it discards intermediate touch events and sends only the most recent event seen for each finger.
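The per-finger coalescing can be sketched independently of the browser. In this hypothetical `TouchCoalescer`, each new event for a finger overwrites the previous one, and the pending batch is published once per animation frame. The real client is browser JavaScript; this only illustrates the logic.

```python
class TouchCoalescer:
    """Keep only the newest touch event per finger between frame flushes."""

    def __init__(self, send):
        self.send = send    # callable that publishes one batch to the server
        self.pending = {}   # finger id -> latest event seen this frame

    def on_touch(self, finger_id, event):
        # Overwrite any earlier event for this finger; intermediate moves are dropped.
        self.pending[finger_id] = event

    def on_animation_frame(self):
        # Called once per rendered frame (up to 60 Hz in a browser).
        if self.pending:
            self.send(list(self.pending.values()))
            self.pending = {}
```

One consequence of keeping only the latest event is that a finger which both lands and lifts within one frame is reported only by its final event, so the server side needs to tolerate points it never saw begin.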

The server runs a 60 Hz event loop; on each iteration it processes touch events as described above and publishes raw point and gesture events to the client. The client receives these events and exposes them through EventEmitter-style subscription handles.
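An EventEmitter-style subscription handle typically means that subscribing returns something you can later call to unsubscribe. The `Emitter` class and the `"gesture"` event name below are hypothetical and only illustrate that pattern; they are not the client library's actual API.

```python
from collections import defaultdict


class Emitter:
    """Minimal EventEmitter-style hub: on() returns a handle that unsubscribes."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, name, handler):
        self._handlers[name].append(handler)

        def off():
            if handler in self._handlers[name]:
                self._handlers[name].remove(handler)
        return off

    def emit(self, name, payload):
        # Iterate over a copy so handlers may unsubscribe while being called.
        for handler in list(self._handlers[name]):
            handler(payload)
```

Usage: `off = emitter.on("gesture", handle)` subscribes, and calling `off()` stops further deliveries without the caller needing to keep a reference to the emitter's internals.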

Additionally, the client library provides thin proxies for touch object and gesture recognition, as well as for touch point assignment. These simply serialize their arguments and transmit them to the server to be processed.
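A thin proxy of this kind only turns a method call into a message. The sketch below assumes a JSON message of the form `{"method": ..., "args": [...]}` and a `transport` callable; both the message shape and the method names are hypothetical, not the actual Gestureworks client protocol.

```python
import json


class CoreProxy:
    """Client-side stand-in that forwards calls to the server as JSON messages.

    Method names and message format are hypothetical; they illustrate the
    proxy pattern, not the actual Gestureworks client API.
    """

    def __init__(self, transport):
        self.transport = transport  # callable taking one JSON string

    def _call(self, method, *args):
        # No local processing: serialize the arguments and hand them off.
        self.transport(json.dumps({"method": method, "args": list(args)}))

    def register_object(self, name):
        self._call("register_object", name)

    def add_gesture(self, name, gesture_id):
        self._call("add_gesture", name, gesture_id)

    def assign_point(self, name, point_id):
        self._call("assign_point", name, point_id)
```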